OPTIMIZATION

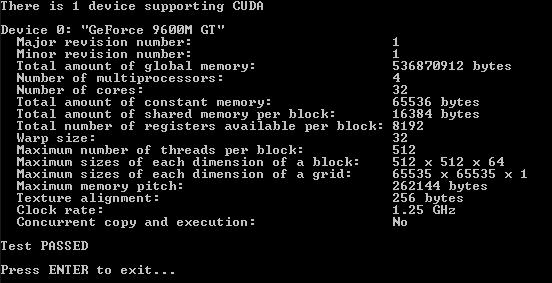

The following is the output of CUDA device query for my device.

The optimization was achieved over the following phases:-

Phase 1 :- Get it all together

Since I used the code from recursiveGaussian and matrixMul sample projects in NVIDIA CUDA SDK, the first step was to just get the code together and get it to work.This code takes around 200 ms to run.The calculation doen't include the image processing part. This result is comparable to MATLAB which takes around 180-190 ms to do the same with image processing calculation involved. The code can be downloaded from the following file.You would need CUDA to run it on your machine.

Also you will need to add glut.h, glut32.lib,

glut32.dll, GL/glew.h, glew32.lib, glew32.dll to the project directory,

and add glew32.lib and glut32.lib to Object/library modules inside

visual studio.

Phase 2 :- A proper design

In this phase I gave a proper design to my algorithm and code so as to get a better GPU implementation.The result was a gain of 10ms , and this one includes the calculation for image processing part also. Still the resulting implementation takes around 185 - 190 ms. Not Good!!

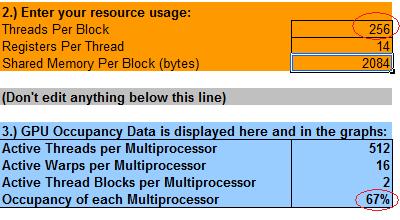

On the left is the output from .cubin file and the right hand side shows the CUDA occupancy calculator, showing just 25% GPU occupancy. This was major hurdle in performance optimization

THE SOLUTION :- So to solve this problem I employed a clever scheme of padding the input and kohonen_weights matrix with 0's so that I no had input size as 262144 x 16 and kohonen_matrix size as 16 x 262144 so as to get the final matrix as 16 x 16 , out of which I extract a 5 x 1 matrix representing the kohone layer. This matrix has one element corresponding to winning neuron as 1 and rest as 0.

Another important step taken towards optimization was the removal of grossberg layer altogether. This is because I figured out that I can get the output count from the kohonen layer's firing neuron rather than getting it finally from grossberg layer.Thus this also helped towards optimization. It didn't give me a major gain since its a small calculation involving multiplication between 4 x 5 and 5 x 1 matrix.

Thus following this approach I was able to get the processing time to 65 - 75 ms. A gain of around 120 ms.

I tried using othe block sizes but using 16 x 16 I got the best results.

Phase 4 :- Image processing optimization - 1D to 2D

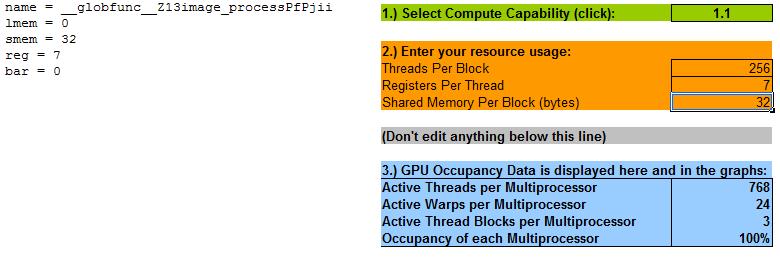

I was following the same approach used in the recursive gaussian example till now of processing the image in a 1D fashion. So I switched over to a 2D implementation and also got shared memory in the picture.This was done after consider the following:-

The resulting gain was around 10 ms. But the occupancy was already 100% and the changes I made also kept it at 100%, so occupancy was not the affecting factor.Shared memory played the role in optimization here.

Phase 5:- Final Optimization - Reducing the number of CPU -GPU transfers

According to the NVIDIA CUDA PROGRAMMING GUIDE, its very important to design the algorithm in a way that number of CPU - GPU transfers are reduced, even if it means an increase in calculations.So intermediate data structures which are generated should be kept at GPU side only.

So till now what I was doing was to get the Image to the GPU , process it and then send the output array to CPU.

Then I padded it with 0's at the CPU and then sent the result ing array back to GPU as input for the neural network.

What I did here was to do the padding on the GPU side only, which meant 2 less GPU-CPU transfers.

This finally brought my computation time to 40 - 45 ms.

A gain of around 20 ms over the previous phase.

Thus starting from a 200 ms I was able to get the final computation time to be 45 ms. A five fold improvement !!